Projects

Our research investigates how we use our senses to understand the world and the people in it.

- Our experimental approaches capture the dynamic way in which we perceive, process and respond to sensory social cues.

- Our projects highlight the benefit of a multilevel perspective, combining neurophysiological measures and subjective report (including microphenomenology), to explain how we experience the world.

- We strive to create research that is generalisable, open and impactful.

If you would like to participate in our ongoing experiments, please contact us at participate@contaktlab.space.

Trusting ourselves and others

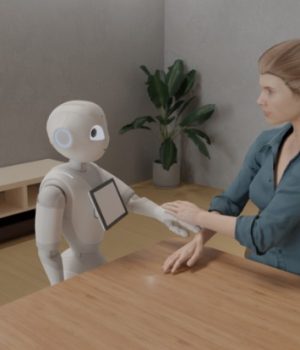

- Trust through touch: bridging the gap in human-robot interactions through reciprocal affective touch

Our social ties are often based on trust: the willingness to rely on another person when one's own resources are not sufficient for a given task or problem. We study the link between trust and affective touch to understand whether skin-to-skin contact with another person promotes perceived trustworthiness. We are specifically interested in the power of trust and touch in human-robot interactions, to understand whether robots can be perceived not only as tools but also as social partners we can trust (and potentially touch).

- Certainty and touch

Social interactions are often dominated by uncertainty. A given word or look can have very different meanings depending on the context in which it is exchanged, and its value (positive, negative or neutral) is often ambiguous. We are interested in understanding whether affective touch can facilitate the interpretation of other social cues, especially when ambiguity and uncertainty are high, as in human-robot interactions.

Representations of self and other

- Self / other touch

- Joint action and self / other boundaries

- Touch medicine?

Multimodal measures of social interaction

- Capturing coordinative creativity

Whether in a string quartet, a basketball team or a surgical team, we often have to coordinate our actions with others in time. This project explores how "who" we interact with and "how" we interact with them contribute to successful coordination.

- Multilevel analysis of biomarkers of social affective touch

When our skin is touched, where the contact occurs, how fast it moves and how warm it is determine which skin receptors and afferents are activated, and some of these afferents project to areas of the social brain. This means that our brain does not only detect basic sensory features of touch; it also helps us make sense of its emotional meaning. We use neuroimaging techniques such as functional near-infrared spectroscopy (fNIRS) to identify the neural hubs that respond selectively to pleasant, emotionally meaningful affective touch.

- Physiological markers of social affective touch

Being touched by someone triggers a series of physiological changes. We measure physiological responses to affective touch, such as heart rate and skin conductance, which vary with how positive and arousing the touch is perceived to be. We are also testing physiological responses to digital versions of affective touch, delivered via haptic actuators developed at the Chair of Acoustics and Haptics.

Multisensory experiences of our world and others

- Multisensory experiences of affective touch

- Multisensory enhancement in clinical contexts (Synergia, Veiio)

- Lullabies and touch

- Sonification and augmented reality

We can extract information from the world through multiple senses, but we typically rely on vision. However, research into sensory substitution and augmented reality has shown that we can tune our experiences by extracting information through our other senses. In this project, we are exploring ways to augment reality through the auditory channel: by sonifying information into music or informative sounds that help us make sense of a given context.

Digital / virtual touch

We hold hands, pat each other on the back and caress one another to provide comfort and to communicate. A large part of our work, including projects funded by the Center for the Tactile Internet with Human in the Loop (www.CeTI.com), explores the cognitive mechanisms and neurophysiological correlates of social affective touch, with a focus on bringing affective touch into the digital realm.

Virtual Touch

Virtual reality offers great potential for facilitating communication and social connection beyond physical distance. However, virtual interactions are often limited to visual and auditory experiences, leaving touch out. Our projects investigate the power of affective touch in virtual social interactions. We aim to understand which types of interpersonal touch may be optimal in virtual reality by measuring people's subjective experiences, behaviours and neurophysiological responses. This approach allows us to tease out individual differences in responses to affective touch, for example those related to age and cultural norms.

HandsOn

The HandsOn self-help app and the Virtual Touch digital toolbox, developed by an international team of experts in neuroscience and technology, invite people to explore their personal relationship with touch, to discover what it means to them and to learn about its influence on well-being and social relationships. We are particularly interested in context-dependent and gender-specific differences during adolescence: "When, by whom and where is it particularly pleasant for adolescents to be touched?" To address this question, we are collaborating with schools to offer students the opportunity to try the HandsOn app, learn more about themselves and become scientists in the field of affective touch.

For more information, see https://www.unibw.de/virtualtouch/handson

We are currently running several citizen science studies investigating touch behaviours and preferences in various populations and across age groups. For more information and to get involved, click here.

Touch at a distance (ETC)